Here are Facebook’s Community Standards on “Hate Speech”:

Hate Speech

Facebook removes hate speech, which includes content that directly attacks people based on their:

- Race,

- Ethnicity,

- National origin,

- Religious affiliation,

- Sexual orientation,

- Sex, gender, or gender identity, or

- Serious disabilities or diseases.

Organizations and people dedicated to promoting hatred against these protected groups are not allowed a presence on Facebook.

People can use Facebook to challenge ideas, institutions, and practices. Such discussion can promote debate and greater understanding. Sometimes people share content containing someone else’s hate speech for the purpose of raising awareness or educating others about that hate speech. When this is the case, we expect people to clearly indicate their purpose, which helps us better understand why they shared that content.

We allow humor, satire, or social commentary related to these topics, and we believe that when people use their authentic identity, they are more responsible when they share this kind of commentary. For that reason, we ask that Page owners associate their name and Facebook Profile with any content that is particularly cruel or insensitive, even if that content does not violate our policies. As always, we urge people to be conscious of their audience when sharing this type of content.

While we work hard to remove hate speech, we also give you tools to avoid distasteful or offensive content. Learn more about the tools we offer to control what you see. You can also use Facebook to speak up and educate the community around you. Counter-speech in the form of accurate information and alternative viewpoints can help create a safer and more respectful environment.

Hit “read more” below to see Facebook’s Community Standards in full.

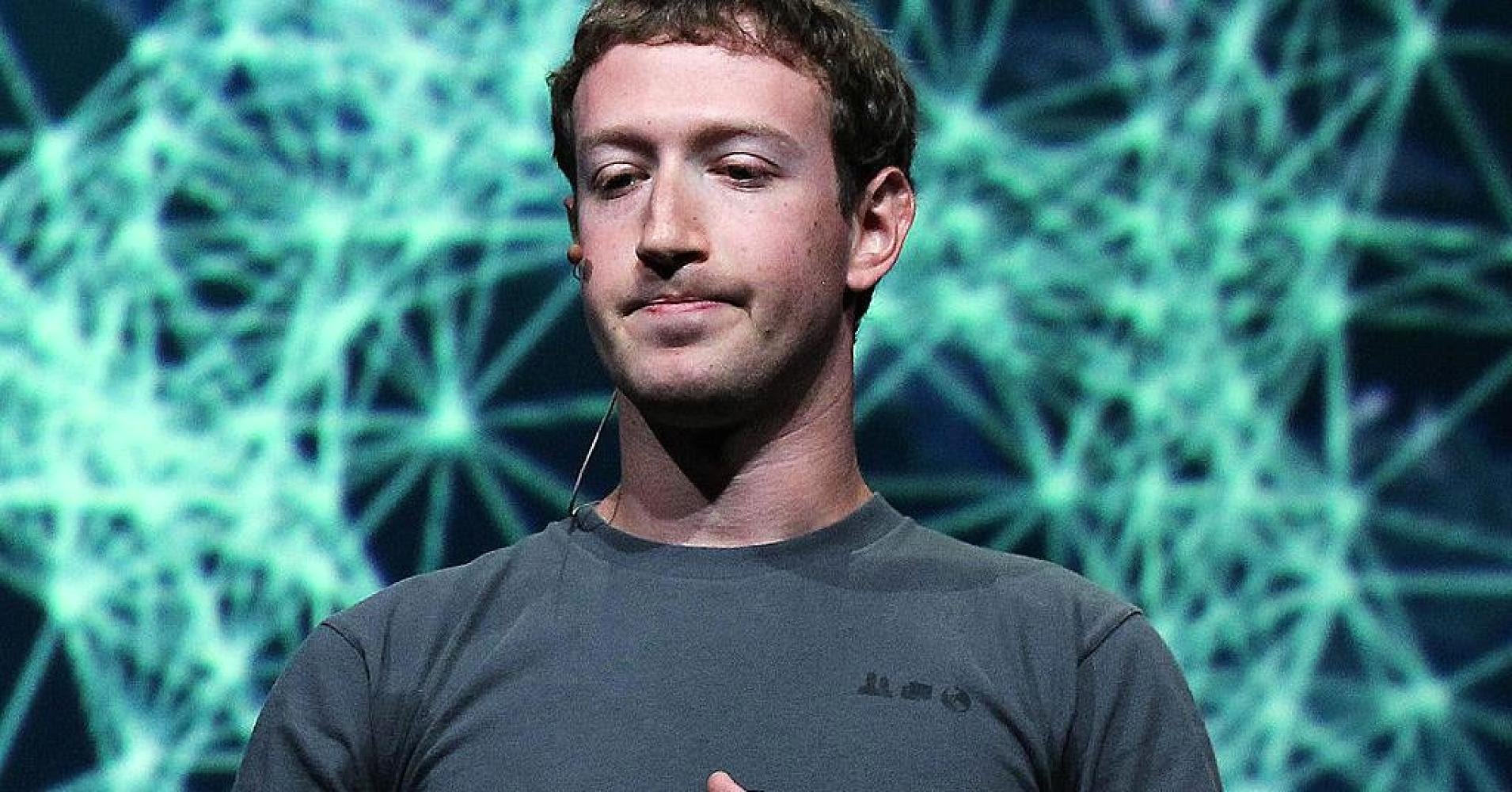

For years, Facebook has been making the same apology for how it enforces its community standards.

Facebook Apologizes After Report Shows Inconsistencies In Removing Hate Speech

Digital Trends

Facebook has taken plenty of criticism on the platform’s algorithms designed to keep content within the community guidelines, but a new round of investigative reporting suggests the company’s team of human review staff could see some improvements too.

Social Contact